C1. Reliable, Scalable, and Maintainable Applications - Part 2

Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems

Hello 👋 ,

Welcome to a new post! We will continue our notes from Part I where we discussed reliability. In this Part II, we discuss scalability which is essentially characterised by load parameters, performance measures, and managing associated resources. So, let’s get started and understand what these terms mean and how are they related with one another.

Scalability

What is scalability?

Scalability is the system's ability to cope with increased load

We need to define load first to call a system scalable. Without the definition of load it is meaningless to say "X is scalable".

For example, if data grows we need to think about how are we going to scale storage layer. If number of users grow how are we going to scale number of connections.

Describing Load

What is a load?

A load can be described with a few numbers which are called load parameters

Load parameters are selected based on application and its architecture

For example, for a popular blog the reads are going to be much higher than writes as writer might publish once a day but thousands of reads will be there as readers come to read. In this example, when talking about scalability we can consider the number of reads as load parameter.

Another example, application logs are generated at much larger rate (write heavy) but they are consumed rarely, like, debugging scenarios. In this case, the load parameter can be number of writes.

The book covers a little complex example of twitter which I omitted for simplicity purpose (will be covered in chapter 12). But don't worry we will be covering Twitter's system design in fairly detail in my new series on system design interview questions. Stay tuned!

Describing Performance

Why do we need performance numbers?

To test what happens when you increase load on your system we need to answer following two questions

When you increase a load parameter by keeping resources (CPU, memory etc.) constant how performance of system affected?

When you increase a load parameter how much resources you need to maintain the systems performance

But what are performance numbers?

It depends. In a batch processing system like Hadoop/MapReduce it is throughput i.e. number of records processed

Generally, in service calls or websites the performance number is response time - the time between a client sending a request and receiving a response.

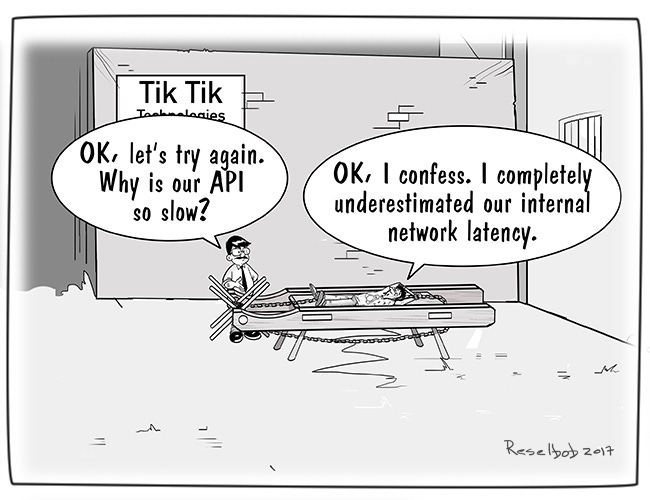

What is the difference between latency and response time?

Response time is what the client sees = processing time + queuing delays + network delays

Latency is the duration that a request is waiting to be handled

How to visualize the performance numbers?

We continuously collect performance numbers, e.g. response time of each request is recorded and plot them on a graph called as distribution.

The distribution of values is not uniform even for the same request. A same request again might take more or less time than previous one due number of uncontrollable factors such packet drops, queueing delays at router etc.

Hence, the large number of data points are summarised by applying some aggregation function such as average (or mean). For example, for each 1 minute window average all the response time and plot a single data point for that minute.

Why average is a bad measure for performance numbers?

The outliers, the values which are really large or really small compared to rest of the data sets, have bad impact on averages and averages then don't represent entire set correctly.

For example, average for {1, 1, 2, 4} is (1 + 1 + 2 + 4) / 4 = 2 but for {1, 1, 2, 4, 12} is (1 + 1 + 2 + 4 + 12) / 5 = 4. By just adding one outlier average doubles.

What is median and how to compute it?

Median on other hand is immune to outliers, because it ignores it!

To compute median of a list sort the list ascending and select the middle element.

For example, {1, 1, 2, 4, 10} the median is 2.

What is a percentile?

Percentile is a rank of number in a sorted list. A median is 50th percentile.

For example, if we have 200ms as 50th percentile then it means 50% of the users are experiencing more than 200ms response time and rest are 200ms or less.

What percentiles to look at when measuring performance?

Consider a case where user makes 10 parallel requests to the service. The effective response time (RT) to the user can be computed as MAX(request_1_RT, request_2_RT, ..., request_10_RT). A request is as slow as its slowest component.

Assuming 50th percentile is 200ms, so the probability that at least one of the 10 request is slower than 200ms is 99.9%+. This effect is known as tail latency amplification on higher percentiles.

How did we get 99.9%?

Probability of event happening = 1 - Probability of that event not happening.

Hence, Probability of request being slower than 200ms = 1 - (Probability of request not being slower than 200ms)^10 = 1 - 0.5^10. Raise to the power 10 is because there are 10 parallel requests.So we generally use p90, p99, p99.9 for measuring performance. Higher percentile latencies (99, 99.9 etc.) for response time are called tail latencies.

At what level big enterprise such as Amazon try to optimize their systems?

They measure at p99.9. That means every 1 out of 1000 requests are affected.

Optimizing beyond p99.9, such as p99.99, is costly and can be affected by random events which are out of our control

How performance numbers are used?

When two services decides to work together and call each other's API they also define their performance numbers as Service Level Agreements (SLA) and Service Level Objectives (SLO).

If one service violates the contract, such as p50 will be under 200ms and p99 will be under 1s, the other can hold it accountable.

Why is it important to measure response times on client side?

It takes only a small number of slow requests to hold up processing subsequent requests - called as head-of-line blocking.

If those subsequent requests can be processed fast by the server, the server metrics will report everything is good. But for the same requests, client had to wait additionally due to head of line slow requests. Hence, measuring client side response time is important.

Approaches for Coping with Load

What is vertical scaling?

Increasing a system's configuration such high end CPUs, adding more RAM, GPUs etc. is called vertical scaling.

Traditionally apps were deployed on a single powerful server. But these systems quickly become expensive and they may not get utilized to full extent.

Nonetheless, single server apps are easy to manage.

What is horizontal scaling?

Rather having a powerful hardware machine, a small set of inexpensive boxes work together to achieve given task.

Most of the heavy lifting around state management and coordination is done at software or application layer.

This mode allows us to incrementally scale up or scale down by adding or removing boxes respectively. Hence, it is cost efficient and machines can be utilized fully in their course of operation.

What is elastic & manual scaling?

Scaling where resources (compute or storage) are automatically added without manual intervention is called elastic scaling. For example, Amazon EC2 Auto scaling group.

Elastic scaling is useful when load is highly unpredictable

Manual scaling systems requires estimation, planning, and procurring hardwares manually as the need arises. Sometimes this may be the simpler and operationally less surprising approach.

It is conceivable that distributed data systems will become the default in the future, even for use cases that don’t handle large volumes of data or traffic. Over the course of the rest of this book we will cover many kinds of distributed data systems, and discuss how they fare not just in terms of scalability, but also ease of use and maintainability.

Is there a generic pattern for scaling applications?

There is no one size fits all architecture pattern for designing & scaling applications. For each application, load params needs to determined and then in which dimension the system needs to be scaled to be decided.

Though scalable architectures are built for specific applications but they are built from general purpose building block which will be discussed in this book.

That’s it for this post. See you in the next post there we will discuss the last part of this chapter “Maintainability” 😮.

Liked the article? Please Share & Subscribe! 😀

Possible Typo

Welcome to new a post! ---> Welcome to a new post!